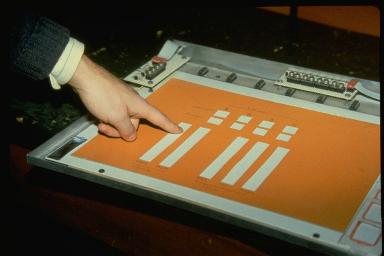

Figure 1: Virtual Devices in the Macintosh Control Panel

WINDOWS ON TABLETS AS A MEANS OF ACHIEVING VIRTUAL INPUT DEVICES

Ed BROWN, William A.S. BUXTON and Kevin MURTAGH

Computer Systems Research Institute,

University of Toronto,

Toronto, Ontario,

Canada M5S 1A4

Users of computer systems are often constrained by the limited number of physical devices at their disposal. For displays, window systems have proven an effective way of addressing this problem. As commonly used, a window system partitions a single physical display into a number of different virtual displays. It is our objective to demonstrate that the model is also useful when applied to input.

We show how the surface of a single input device, a tablet, can be partitioned into a number of virtual input devices. The demonstration makes a number of important points. First, it demonstrates that such usage can improve the power and flexibility of the user interfaces that we can implement with a given set of resources. Second, it demonstrates a property of tablets that distinguishes them from other input devices, such as mice. Third, it shows how the technique can be particularly effective when implemented using a touch sensitive tablet. And finally, it describes the implementation of a prototype "input window manager" that greatly facilitates our ability to develop user interfaces using the technique.

The research described has significant implications for direct manipulation interfaces, rapid prototyping, tailorability, and user interface management systems.

A significant trend in user interface design is away from the discrete, serial nature of what we might call a digital approach, towards the continuous, spatial properties of an analogue approach.

Direct manipulation systems are a good example of this trend. With such systems, controls and functions (such as scroll bars, buttons, switches and potentiometers) are represented as graphical objects which can be thought of as virtual devices. A number of these are illustrated in Fig. 1. The impression is that of a number of distinct devices, each with its own specialized function, and occupying its own dedicated space. While powerful, the impression is an illusion, since virtually all interaction with these devices is via only one or two physical devices: the keyboard and the mouse.

Figure 1: Virtual Devices in the Macintosh Control Panel

The figure shows graphical objects such as potentiometers, radio buttons and icons. Each functions as a distinct device. Interaction, however, is via one of two physical devices: the mouse or keyboard.

The strength of the illusion, however, speaks well for its effectiveness. Nevertheless, this paper is rooted in a belief that direct manipulation systems can be improved by expanding the design space to better afford turning this illusion into reality. Distinct controls for specific functions provide the potential to improve the directness of the user's access (such as through decreased homing time and exploiting motor memory). Input functions are moved from the display to the work surface, thereby freeing up valuable screen real estate. Because they are dedicated, physical controls can be specialized to a particular function, thereby providing the possibility of improving the quality of the manipulation.

While one may agree with the general concepts being expressed, things generally break down when we try to put these ideas into practice. Given the number of different functions and virtual devices that are found in typical direct manipulation systems, having a separate physical controller for each would generally be unmanageable. Our desks (which are already crowded) would begin to look like an aircraft cockpit or a percussionist's studio. Clearly, the designer must be selective in what functions are assigned to dedicated controllers. But even then, the practical management of the resources remains a problem.

The contribution of the current research is to describe a way in which this approach to designing the control structures can be supported. To avoid the explosion of input transducers, we introduce the notion of virtual input devices that are spatially distinct. We do so by partitioning the surface of one physical device into a number of separate regions, each of which emulates the function of a separate controller. This is analogous on the input side to windows on displays.

We highlight the properties that are required of the input technology to support such windows, and discuss why certain types of touch tablets are particularly suited for this type of interaction.

Finally, we discuss the functionality that would be required by a user interface management system to support the approach. We do so by describing the implementation of a working prototype system.

The idea of virtual devices is not new. One of the most innovative approaches was the virtual keyboard developed by Ken Knowlton (1975, 1977a,b) at Bell Laboratories. Knowlton developed a system using half-silvered mirrors to permit the functionality of keyboards to be dynamically reconfigured. Partitioning a tablet surface into regions is also not new. Tablet mounted menus, as seen in many CAD systems, are one example of existing practice.

Our contribution:

Hence digitizing tablets will work, but mice, trackballs, and joysticks will not. Within the class of devices which meet these two criteria (including light pens, graphics tablets, touch screens), touch technologies (and especially touch tablets) have noteworthy potential.

Control systems that employ multiple input devices generally have two important properties:

Simultaneous access is also important in many situations. Within the domain of human-computer interaction, for example, Buxton and Myers (1986) demonstrate benefits in tasks similar to those demanded in text editing and CAD.

The primary attribute of touch technologies that affords eyes-free operation is their having no intermediate hand-held transducer (such as a stylus or puck). Sensing is with the finger. Consequently, physical templates can be placed over a touch tablet (as illustrated in Fig. 2) and provide the same type of kinesthetic feedback that one obtains from the frets on a guitar or the cracks between the keys of a piano. This was demonstrated in Buxton, Hill and Rowley (1985). Because of the ability to memorize the position of virtual devices and sense their boundaries, usage is very different than that where a stylus is used, or where the virtual devices are delimited on the tablet surface graphically, and cannot be felt.

An interesting result from our studies, however, is the degree to which eyes-free control can be exercised on a touch tablet which is partitioned into a number of virtual devices, but which has no graphical or physical templates on the tablet surface.

Figure 2: Using a template with a touch tablet

A cut-out template is being placed over a touch tablet. Each cut-out represents a different virtual device on a prototype operating console. The user can operate each device "eyes-free" since boundaries of the virtual devices can be felt (due to the raised edges of the template). If the tablet can sense more than one point of contact at a time, multiple virtual devices can be operated at once. (From Buxton, Hill, & Rowley, 1985).

A touch tablet of this size has the important property that it is on the same spatial scale as the hand. Therefore, control and access over its surface fall within the bounds of the relatively highly developed fine motor skills of the fingers, even if the palm is resting in a fixed (home?) position.

Using a 3"x3" touch-sensitive tablet (shown in Fig. 3), our informal experience suggests that with very little training users can easily discriminate regions to a resolution of up to 1/3 of the tablet surface's vertical or horizontal dimensions. Thus, one can implement three virtual linear potentiometers by dividing the surface into three uniformly sized rows or columns, or, for example, one can implement nine virtual push-button switches by partitioning the tablet surface into a 3x3 matrix.

If the surface is divided into smaller regions, such as a 4x4 grid, the result will be significantly more errors, and longer learning time. In such cases, using the virtual devices will require visual attention. The desired eyes-free operability is lost.

These limits are illustrated in Fig. 4. For example, we see that nine buttons for playing tick-tack-toe can work rather well, while a sixteen-button numerical keypad does not. Similarly, three virtual linear faders to control Hue, Saturation and Value work, while four such potentiometers do not.
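As a minimal sketch of the partitioning described above (not the authors' implementation), the following Python fragment maps a touch point to a cell of a uniform grid. It assumes that coordinates have already been normalized to the range 0 to 1; the function names `grid_cell` and `button_index` are hypothetical.

```python
def grid_cell(x, y, cols=3, rows=3):
    """Map a normalized touch point (0 <= x, y <= 1) to a (col, row) cell."""
    if not (0.0 <= x <= 1.0 and 0.0 <= y <= 1.0):
        raise ValueError("point lies outside the tablet surface")
    # A point exactly on the far edge belongs to the last cell.
    col = min(int(x * cols), cols - 1)
    row = min(int(y * rows), rows - 1)
    return col, row


def button_index(x, y, cols=3, rows=3):
    """Number the cells row by row, as for a 3x3 matrix of push buttons."""
    col, row = grid_cell(x, y, cols, rows)
    return row * cols + col
```

Calling `grid_cell(x, y, cols=3, rows=1)` gives the three-column layout used for linear potentiometers, while the defaults give the nine-button matrix.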

Our belief is that the performance that we are observing is due to the size of the tablet as it relates to the size of the hand, and the degree of fine motor skills developed in the hand by virtue of everyday living. Being sensitive to these limits is very important as we shall see later when we discuss "dynamic windows." Because of this importance, these limits of motor control warrant more formal study.[1]


Figure 4: Grids on Touch Tablets

Four mappings of virtual devices are made onto a touch tablet. In (a) and (c), the regions represent linear potentiometers. The surface is partitioned into 3 and 4 regions, respectively. In (b) and (d) the surface is partitioned into a matrix of push buttons (3x3 and 4x4, respectively). Using a 3"x3" touch tablet without templates, our informal experience is that users can resolve virtual devices relatively easily, eyes-free, when the tablet is divided into up to 3 regions in either or both dimensions. This is the situation illustrated in (a) and (b). However, resolving virtual devices where the surface is more finely divided, as in (c) and (d), presents considerably more load. Eyes-free operation requires far more training, and errors are more frequent. The limits on this discrimination warrant more formal study.

Finally, there is the issue of parallel access. Touch technologies have the potential to support multiple virtual devices simultaneously. Again, this is largely by virtue of their not demanding any hand-held intermediate transducer. If, for example, I am holding a stylus in my hand, the affordances of the device bias my expectations towards wanting to draw only one line at a time. In contrast, if I were using finger paints, I would have no such restrictive expectations.

A similar effect is at play in interacting with virtual devices implemented on touch tablets. Consider the template shown in Fig. 2. Nothing biases the user against operating more than one of the virtual linear potentiometers at a time. In fact, experience in the everyday world of such potentiometers would lead one to expect this to be allowed. Consequently, if it is not allowed, the designer must pay particular attention to avoiding probable errors that would result from this false expectation.

Being able to activate more than one virtual device at a time opens up new possibilities in control and prototyping. The mock-up of instrument control consoles is just one example. The biggest obstacle restricting the exploitation of this potential is the lack of a touch tablet that is capable of sensing multiple points. However, Lee, Buxton and Smith (1985) have demonstrated a working prototype of such a transducer, and it is hoped that the applications described in this current paper will help stimulate more activity in this direction.

In summary, we have seen that position sensitive planar devices readily support spatially distinct virtual input devices. Further, we have seen that touch technologies, and touch tablets in particular, have affordances which are particularly well suited to this type of interaction. Finally, it has been shown that a touch tablet capable of sensing more than one point of contact at a time would enable the simultaneous operation of multiple virtual devices.

In our approach, the data from each virtual device is transmitted to the application as if it were coming from an independent physical device with its own driver. If the region is a button device, its driver transmits state changes. If it is a 1-D relative valuator, it transmits one dimension of relative data in stream mode. All of this is accomplished by placing a "window manager" between the device driver for the sensing transducer and the application.[2] Hence, applications can be constructed independent of how the virtual devices are implemented, thereby maintaining all of the desired properties of device independence. Furthermore, this is accomplished with a uniform set of tools that allows one to define the various regions and the operational behaviour of each region.
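The two driver behaviours mentioned above can be illustrated with a short Python sketch. It is an assumption-laden illustration rather than the prototype's code: the class names `ButtonDevice` and `RelativeValuator1D`, the `feed` method, and the use of `None` to signal that the finger has lifted are all hypothetical.

```python
class ButtonDevice:
    """Virtual button: reports only changes of state."""

    def __init__(self):
        self.down = False

    def feed(self, touching):
        """Return 'press' or 'release' on a state change, else None."""
        if touching == self.down:
            return None
        self.down = touching
        return "press" if touching else "release"


class RelativeValuator1D:
    """Virtual 1-D relative valuator: reports movement along one axis."""

    def __init__(self):
        self.last = None

    def feed(self, pos):
        """pos is the coordinate along the axis, or None when contact ends.

        Returns the change since the previous sample, or None when there
        is no previous sample to compare against.
        """
        if pos is None:
            self.last = None
            return None
        delta = None if self.last is None else pos - self.last
        self.last = pos
        return delta
```

Because both classes expose the same `feed` interface, an application consuming their output need not know that they share one physical surface.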

Window managers for displays can support the dynamic creation, manipulation, and destruction of windows. Is it reasonable to consider comparable functionality for input windows?

Our research (Buxton, Hill & Rowley, 1985) has demonstrated that under certain circumstances, the mapping of virtual devices onto the tablet surface can be dynamically altered. For example, in a paint system, the tablet may be a 2D pointing device in one context, and in another (such as when mixing colours) may have three linear potentiometers mapped onto it.

Changing the mapping of virtual devices onto the tablet surface restricts or precludes the use of physical templates. However, this is not always a problem. If visual (but not tactile) feedback is required, then a touch sensitive flat panel display can provide graphical feedback as to the current mapping. This is standard practice in many touch screen "soft machine" systems.

As has already been discussed, under certain circumstances, some touch tablets can be used effectively without physical or graphical templates. This can be illustrated using a paint mixing example. Since there are three components to colour, three linear potentiometers are used. As in Fig. 4(a), the potentiometers are vertically oriented so that there is no confusion: up is increase, down is decrease. The potentiometers are, left-to-right, Hue, Saturation, and Value (H, S & V in the figure). This ordering is consistent with the conventional order in speech; consequently, there is little or no confusion for the user.
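A hedged Python sketch of this colour-mixing layout follows. It assumes normalized coordinates with y = 0 at the bottom edge of the tablet (so that moving up increases the level) and treats each strip as an absolute potentiometer; the names `apply_touch` and `to_rgb` are hypothetical, and the conversion relies on the standard-library `colorsys` module.

```python
import colorsys

# Left-to-right order of the three vertical strips, as given in the text.
CHANNELS = ("hue", "saturation", "value")


def apply_touch(colour, x, y):
    """Return a copy of colour with one HSV channel set from a touch point.

    Assumes x and y lie in [0, 1] with y = 0 at the bottom edge.
    """
    column = min(int(x * 3), 2)  # which of the three strips was touched
    updated = dict(colour)
    updated[CHANNELS[column]] = y
    return updated


def to_rgb(colour):
    """Convert the mixed colour to an (r, g, b) triple in [0, 1]."""
    return colorsys.hsv_to_rgb(
        colour["hue"], colour["saturation"], colour["value"]
    )
```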

The example illustrates three conditions for using virtual devices without templates:

We have developed an input window manager (IWM). The tool consists of a "meta device" that provides for quick specification of the layout and behavior of the virtual devices. The specified configuration functions independent of the application. Users employ a gesture-based trainer to "show" the system the location and type of virtual device being specified. Hence, for example, adding a new template involves little more than tracing its outline on the control surface, defining the virtual device types and ranges, and attaching them to application parameters. Since the implementation of new devices can be achieved as quickly as they can be laid out on the tablet, this tool provides a new dimension of system tailorability.
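One way to picture the result of a trainer session is as a small record per virtual device. The Python sketch below is illustrative only: it assumes that a traced outline is reduced to its bounding rectangle, and the names `VirtualDeviceSpec`, `bounds_of_trace`, and `train`, as well as the example parameter name, are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class VirtualDeviceSpec:
    kind: str            # e.g. "button" or "slider"
    bounds: tuple        # (x0, y0, x1, y1) on the tablet surface
    value_range: tuple   # (low, high) reported to the application
    parameter: str       # application parameter the device is attached to


def bounds_of_trace(points):
    """Reduce a traced outline to its bounding rectangle."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    return (min(xs), min(ys), max(xs), max(ys))


def train(points, kind, value_range, parameter):
    """Build a device specification from a traced outline."""
    if len(points) < 2:
        raise ValueError("an outline needs at least two points")
    return VirtualDeviceSpec(kind, bounds_of_trace(points), value_range, parameter)
```

For example, `train([(0.1, 0.2), (0.3, 0.2), (0.3, 0.9), (0.1, 0.9)], "slider", (0, 255), "brush_width")` yields a slider occupying the rectangle from (0.1, 0.2) to (0.3, 0.9).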

In order to support iterative development, the tool should allow the user to suspend the application program, change the input configuration (by invoking a special process to control the virtual devices), and then proceed with the application program using the altered input configuration.

Figure 5. Taxonomy of Hand-Controlled Continuous Input Devices.

Cells represent input transducers with particular properties. Primary rows (solid lines) categorize property sensed (position, motion or pressure). Primary columns categorize number of dimensions transduced. Secondary rows (dashed lines) differentiate devices using a hand-held intermediate transducer (such as a puck or stylus) from those that respond directly to touch - the mediated (M) and touch (T) rows, respectively. Secondary columns group devices roughly by muscle groups employed, or the type of motor control used to operate the device. Cells marked with a "+" can easily be emulated using virtual devices on a multi-touch tablet. Cells marked with an "O" indicate devices that have been emulated using a conventional digitizing tablet. After Buxton (1983).

Figure 6. Architecture of a Prototype Input Window Manager

The tablet poller monitors the activity on the physical device, filters redundant information, and normalizes the data points before passing them on. The normalized format allows use of a range of physical devices simply by changing the tablet poller for the specific device.
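The filtering and normalization steps might be sketched in Python as follows. This is an assumption-based illustration, not the prototype's poller: raw samples are taken to be integer (x, y) pairs, `None` stands for "no contact", and `max_x` and `max_y` are the largest coordinates the hypothetical device reports.

```python
def normalize_samples(samples, max_x, max_y):
    """Yield normalized points, dropping consecutive duplicate samples.

    samples: iterable of raw (x, y) pairs, or None for no contact.
    Output points lie in [0, 1] on both axes; None is passed through once
    per run so that later stages can see when contact ends.
    """
    last = object()  # sentinel that compares unequal to every sample
    for sample in samples:
        if sample == last:
            continue  # redundant: nothing changed since the last sample
        last = sample
        if sample is None:
            yield None
        else:
            yield (sample[0] / max_x, sample[1] / max_y)
```

Supporting a different tablet would then mean supplying a different source of raw samples and different maxima, while everything downstream sees the same normalized format.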

The virtual device coordinator is active if the current activity is not a trainer session. It uses the incoming tablet data and the configuration provided by a trainer session to identify the virtual device to which the incoming data belongs. It passes the appropriate information on to the device specialist (device driver) for that virtual device. The device specialist determines the effect of the input and signals the request handler appropriately.
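The routing role of the coordinator can be sketched as a simple hit test followed by a dispatch. The Python below is a hypothetical illustration: the `Coordinator` class, the rectangle format, and the modelling of device specialists as plain callables are assumptions, and the value returned stands in for the signal sent to the request handler.

```python
class Coordinator:
    """Routes normalized tablet points to the matching device specialist."""

    def __init__(self, regions, specialists, training=False):
        self.regions = regions          # name -> (x0, y0, x1, y1)
        self.specialists = specialists  # name -> callable(x, y)
        self.training = training        # inactive during a trainer session

    def handle(self, point):
        """Return the specialist's result, or None if nothing applies."""
        if self.training or point is None:
            return None
        x, y = point
        # The first region containing the point wins (insertion order).
        for name, (x0, y0, x1, y1) in self.regions.items():
            if x0 <= x <= x1 and y0 <= y <= y1:
                return self.specialists[name](x, y)
        return None
```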

The virtual devices are accessed by the application program through two communication routines. One routine allows the activation and deactivation of various types of event signals. The other routine accesses the event-queue, returning the specifics of the last event to be signaled. A number of requests are available to the activation routine, including discrete status checks on a device, turning the device "on" or "off" for continuous event signaling, and a utility shutdown request.

The request handler module interprets and acts on requests from the application program, altering or extracting information of the device specialists as needed. It posts appropriate events to the event queue.
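The application-facing interface described above, with one routine for requests and one for reading events, could look roughly like the following Python sketch. All names (`RequestHandler`, `request`, `signal`, `next_event`) and the request strings are hypothetical, and the sketch assumes that the event routine returns the oldest pending event, which the text does not specify.

```python
from collections import deque


class RequestHandler:
    """Holds device state and an event queue for the application."""

    def __init__(self, devices):
        self.state = {name: None for name in devices}
        self.enabled = set()
        self.events = deque()
        self.running = True

    def request(self, kind, device=None):
        """Activation routine: 'status', 'on', 'off' or 'shutdown'."""
        if kind == "status":
            return self.state[device]  # discrete status check
        if kind == "on":
            self.enabled.add(device)
        elif kind == "off":
            self.enabled.discard(device)
        elif kind == "shutdown":
            self.running = False
        else:
            raise ValueError("unknown request: " + kind)
        return None

    def signal(self, device, value):
        """Called by a device specialist when its device changes."""
        self.state[device] = value
        if self.running and device in self.enabled:
            self.events.append((device, value))

    def next_event(self):
        """Event routine: oldest pending event, or None (an assumption)."""
        return self.events.popleft() if self.events else None
```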

Finally, the architecture is such that much of the underlying software can reside in a dedicated processor, thereby freeing up resources on the machine running the main application. This includes part of the tablet poller, the internal representation of the current mapping of the virtual devices onto the tablet, and the virtual device coordinator.

The work described has been exploratory. Nevertheless, we feel that the results are sufficiently compelling to suggest that more formal investigations of the issues discussed are warranted. We hope that the current work will help serve as a catalyst to such research.

2 We thank Alain Fournier for first suggesting the analogy with window managers.

Buxton, W. (1983). Lexical and Pragmatic Considerations of Input Structures. Computer Graphics, (17,1), 31-37.

Buxton W., Hill R., & Rowley P. (1985). Issues and Techniques in Touch-Sensitive Tablet Input. Computer Graphics, 19(3), 215 - 224.

Buxton, W., Lamb, M., Sherman, D., & Smith, K.C. (1982). Towards a Comprehensive User Interface Management System. Computer Graphics, (16,3), 99-106.

Buxton, W. & Myers, B. (1986). A Study in Two-Handed Input. Proceedings of CHI'86 Conference on Human Factors in Computing Systems, 321-326.

Evans, K., Tanner, P., & Wein, M. (1981). Tablet-based Valuators that Provide One, Two, or Three Degrees of Freedom. Computer Graphics, (15,3), 91-97.

Kasik, D. (1982). A User Interface Management System. Computer Graphics, (16,3), 99-106.

Knowlton, K. (1975). Virtual Pushbuttons as a Means of Person-Machine Interaction. Proc. IEEE Conf. on Computer Graphics, Pattern Matching, and Data Structure, 350-351.

Knowlton, K. (1977a). Computer Displays Optically Superimposed on Input Devices. The Bell System Technical Journal (56,3), 367-383.

Knowlton, K. (1977b). Prototype for a Flexible Telephone Operator's Console Using Computer Graphics. 16mm film, Bell Labs, Murray Hill, NJ.

Lee S., Buxton, W., & Smith, K.C. (1985). A Multi-Touch Three Dimensional Touch Tablet. Proceedings of CHI'85 Conference on Human Factors in Computing Systems, 21 - 25.

Myers, B. (1984a). Strategies for Creating an Easy to Use Window Manager with Icons. Proceedings of Graphics Interface '84, Ottawa, May, 1984, 227 - 233.

Myers, B. (1984b). The User Interface for Sapphire. IEEE Computer Graphics and Applications, 4 (12), 13 - 23.

Pike, R. (1983). Graphics in Overlapping Bitmap Layers. Computer Graphics, 17 (3), 331 - 356.

Tanner, P.P. & Buxton, W. (1985). Some Issues in Future User Interface Management System (UIMS) Development. In Pfaff, G. (Ed.), User Interface Management Systems, Berlin: Springer Verlag, 67 - 79.